Artificial Intelligence (AI) is reshaping industries, revolutionizing the way we live and work. However, the energy consumption of AI models has raised significant concerns. This article explores how AI models are becoming more energy efficient, utilizing innovative techniques and metrics. By understanding the energy footprint and future sustainability of AI, we can address the challenges and seize opportunities ahead.

Understanding the Energy Footprint

The growth of AI technologies has led to a larger energy footprint, because training models requires a large amount of computation. That computation consumes electricity, and the energy demand scales with the size and complexity of the model.

AI models are resource-intensive, needing vast amounts of data to learn effectively. Moreover, running AI algorithms, particularly deep learning models, results in hefty power consumption. Understanding this footprint is crucial since it impacts our carbon emissions.

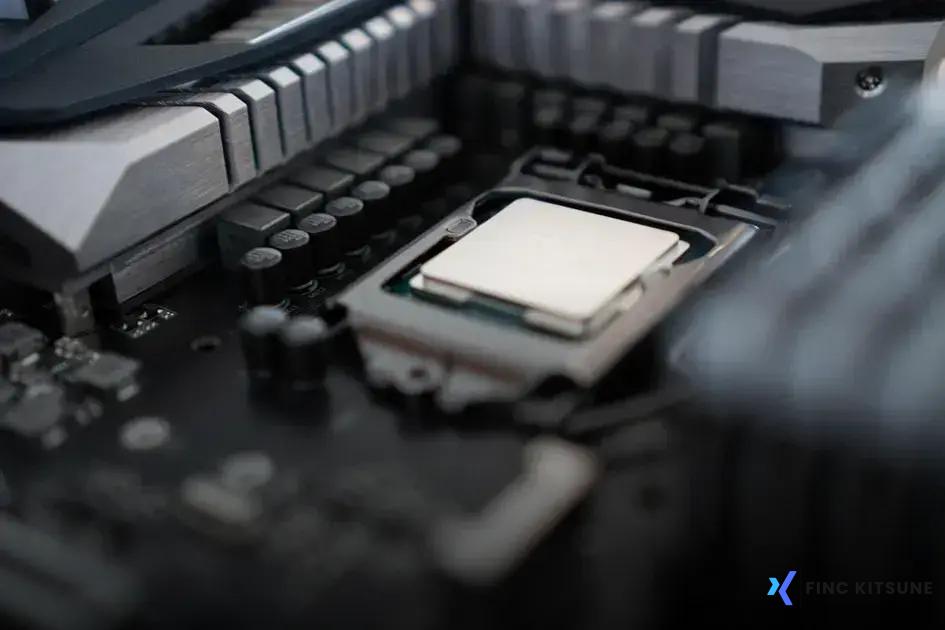

The core of this footprint is driven by the hardware used, which must be powerful enough to handle complicated tasks. Cooling systems required to maintain optimal temperatures further contribute to overall energy consumption.

Analyzing the energy required to train these models versus their utility is necessary to understand their sustainability. By breaking down the processes, businesses can recognize areas needing optimization and reduce excess consumption.

Achieving better energy efficiency involves recognizing where optimizations can occur both in software and hardware environments. Strategies from innovative design to adoption of efficient processors help in minimizing the energy demands associated with AI technologies.

Innovative Techniques for Efficiency

AI models now employ several techniques to improve efficiency without a large cost in performance. One widely used approach is quantization. This technique reduces the precision of the numbers used in calculations, for example by storing weights as 8-bit integers instead of 32-bit floating-point values. Models can then run faster and consume less energy, often with only a small loss of accuracy.
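The idea behind quantization can be sketched in a few lines of plain Python. This is a minimal illustration, assuming symmetric 8-bit quantization with a single scale for the whole list; the function names and the sample weights are hypothetical, and real frameworks use more elaborate schemes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale (an assumed scheme)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the 8-bit range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.523, -1.27, 0.031, 0.987, -0.448]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights)
print(f"scale: {scale:.5f}, largest rounding error: {max_error:.5f}")
```

Each weight now fits in one byte instead of four, which is where the memory and energy savings come from; the price is the small rounding error printed at the end.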

Moreover, pruning is gaining traction. It involves removing redundant neurons and connections in the networks, which cuts down on energy consumption. By trimming the unnecessary parts, AI models become more efficient and easier to deploy on devices with limited resources.
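One common way to decide which connections are redundant is magnitude pruning: weights closest to zero are assumed to matter least and are set to zero. The sketch below shows that criterion only; it is an assumption made for illustration, not the only pruning method, and the function name and sample layer are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


# Hypothetical weights of one small layer.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.45, -0.1]
pruned = magnitude_prune(layer, sparsity=0.5)
remaining = sum(1 for w in pruned if w != 0.0)
print(pruned)
print(f"non-zero weights: {remaining} of {len(layer)}")
```

Zeroed weights save energy only when the runtime or hardware can skip them, so in practice pruning is usually paired with sparse storage formats or with removing whole neurons.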

An emerging technique is neural architecture search (NAS). NAS automates the design of neural networks, leading to the development of architectures that are both effective and energy-efficient. By exploring the vast space of potential architectures, NAS identifies the ones most suitable for energy conservation.

Another innovative method includes utilizing low-power hardware accelerators. These specialized chips are designed to run AI models more efficiently compared to traditional processors, leading to significant reductions in energy use.

Finally, the integration of multi-task learning reduces energy consumption by enabling a single AI model to perform multiple tasks simultaneously. This leads to less redundancy and more efficient use of computational resources, further promoting sustainability.

Energy Efficiency Metrics

In the quest for more sustainable AI, energy efficiency metrics are essential. They quantify the energy consumption of AI models so that improvements can be targeted. Common metrics include Floating Point Operations per Second (FLOPS), energy per operation, and carbon emission intensity. Each gives a different view of how resources are used during model training and deployment.

FLOPS measures computational speed: how many floating-point operations a system can perform per second. A high FLOPS figure indicates high throughput, but it doesn’t say how much energy that throughput costs. Energy per operation, on the other hand, measures the energy required to perform a single operation, typically in joules, and so offers a more direct measure of energy efficiency.
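The relationship between the two metrics is simple arithmetic: energy per operation is average power divided by operations per second. The sketch below works through it for two hypothetical devices; the power and throughput figures are made up for illustration and do not describe any real hardware.

```python
def energy_per_operation(avg_power_watts, ops_per_second):
    """Joules per operation = watts (joules per second) / operations per second."""
    if ops_per_second <= 0:
        raise ValueError("ops_per_second must be positive")
    return avg_power_watts / ops_per_second


# Hypothetical devices: A is faster, B draws less power.
device_a = energy_per_operation(avg_power_watts=300.0, ops_per_second=100e12)
device_b = energy_per_operation(avg_power_watts=75.0, ops_per_second=50e12)

# Convert joules to picojoules for readability.
print(f"Device A: {device_a * 1e12:.2f} pJ per operation")
print(f"Device B: {device_b * 1e12:.2f} pJ per operation")
```

In this made-up comparison, device A has twice the FLOPS of device B yet spends twice as much energy on each operation, which is why FLOPS alone does not capture energy consumption.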

Furthermore, tracking Carbon Emission Intensity provides insight into the environmental impact of training and running AI models. This metric calculates the emissions generated per unit of energy consumed, encouraging the use of renewable energy sources. Analyzing these metrics helps in designing AI systems that not only perform efficiently but also align with sustainability goals.
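Carbon emission intensity turns an energy figure into an emissions estimate: emissions equal energy consumed multiplied by the grid's intensity. The following sketch assumes intensity is given in grams of CO2-equivalent per kilowatt-hour; the training run and both intensity values are hypothetical numbers chosen only to show the calculation.

```python
def energy_kwh(avg_power_watts, hours):
    """Energy in kilowatt-hours from average power draw and run time."""
    return avg_power_watts * hours / 1000.0


def emissions_kg(energy_in_kwh, intensity_g_per_kwh):
    """Emissions in kg CO2e = energy (kWh) * intensity (g CO2e per kWh) / 1000."""
    return energy_in_kwh * intensity_g_per_kwh / 1000.0


# Hypothetical training run: 8 accelerators at 300 W each for 500 hours.
run_energy = energy_kwh(avg_power_watts=8 * 300.0, hours=500.0)

# Two assumed grid mixes, for illustration only.
fossil_heavy = emissions_kg(run_energy, intensity_g_per_kwh=400.0)
mostly_renewable = emissions_kg(run_energy, intensity_g_per_kwh=40.0)

print(f"Energy used: {run_energy:.0f} kWh")
print(f"Fossil-heavy grid: {fossil_heavy:.0f} kg CO2e")
print(f"Mostly renewable grid: {mostly_renewable:.0f} kg CO2e")
```

The same workload produces very different emissions depending on where and when it runs, which is why this metric encourages the use of renewable energy sources.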

To enhance energy efficiency, researchers are optimizing both algorithms and hardware. Efforts are also underway to improve data center efficiency, since data centers are a significant contributor to an AI model’s overall energy use. Comparing AI approaches under these metrics reveals both advances and areas that still need improvement. This metric-based scrutiny is vital for driving innovations aimed at reducing the ecological footprint of AI technologies.

Future of AI and Sustainability

Artificial intelligence is moving towards a more sustainable future by reducing its energy consumption. AI models, once known for their high energy demands, are now being redesigned with energy efficiency in mind. Advances in AI increasingly focus on minimizing computational cost, which supports global sustainability efforts.

One way AI contributes to sustainability is through optimized algorithms that require less energy and fewer resources. New architectures are being developed that prioritize low energy consumption without sacrificing performance. This innovation is crucial as AI expands into industries where power efficiency is a priority.

The adoption of renewable energy sources for powering data centers is another significant stride. Running data centers on solar or wind energy significantly reduces the carbon footprint of the AI models they host.

Energy-efficient AI plays a pivotal role in creating sustainable solutions, leveraging machine learning to predict and manage energy usage more effectively. This transformation is paving the way for AI technologies that align closely with environmental goals.

Challenges and Opportunities Ahead

The quest for making AI models more energy-efficient presents unique challenges and promising opportunities. One of the primary challenges is balancing performance with energy consumption. AI models require substantial computational power, often resulting in significant energy use. However, as AI continues to evolve, opportunities to enhance energy efficiency emerge.

Research and development in optimization algorithms are paving the way. These algorithms aim to reduce the complexity of models without sacrificing accuracy. This approach not only reduces energy consumption but also shortens training times.

Another opportunity lies in hardware advancements. Energy-efficient processors and accelerators are becoming integral to AI infrastructure. These technologies are designed to execute more operations per watt, which significantly lowers energy costs and improves sustainability.

Moreover, embracing renewable energy sources to power data centers can mitigate the environmental impact of AI systems. By combining these sustainable practices, the AI industry can address energy consumption challenges effectively.

As AI models continue to grow in complexity, the need for collaborative strategies is evident. Industry leaders and researchers must work together to establish standards and frameworks that promote sustainable AI development.
